Because Rightworks monitors such a large number of targets with Continuous tests (currently 822 on one of our appliances), we decided to redesign our implementation and rebuild it from scratch. As you can imagine, recreating 822 tests, 556 accounts and 74 applications by hand is a huge amount of work. Thankfully, there is an API that we can use to script moving most items from the old appliance to the new ones.

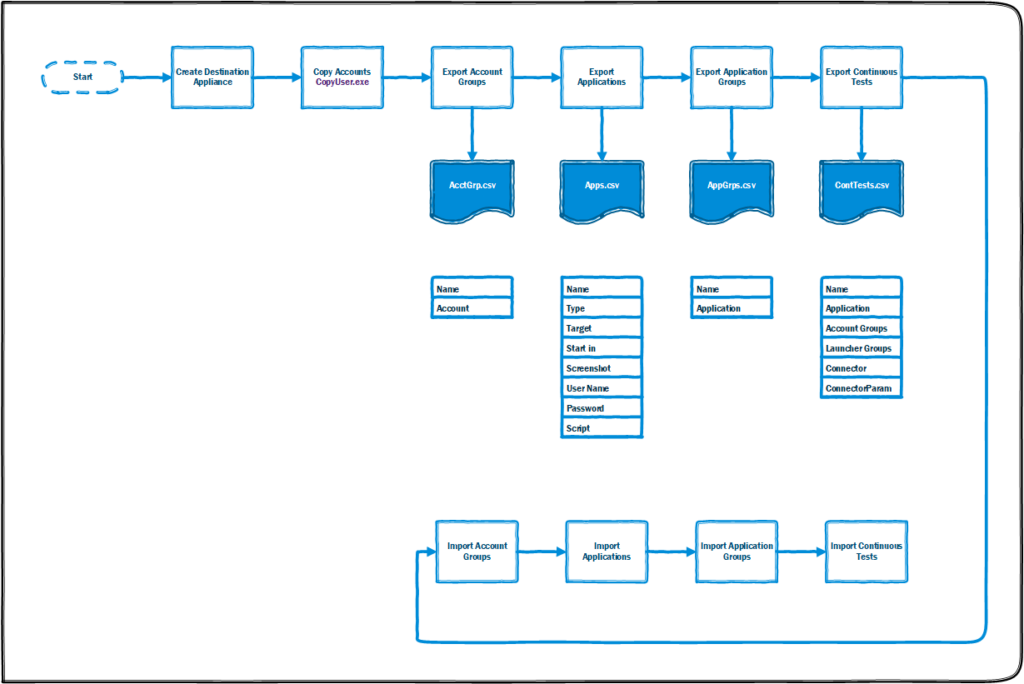

When I first started planning the migration, I intended to export every item to text files and then edit those files to pare down which objects would be imported into each new appliance. The plan looked something like this:

This plan was a little daunting because, at the time, I was not very comfortable with the Login Enterprise API or with using PowerShell to extract data from it and insert data into it. It can be confusing to work out what each API call requires. I found the Login VSI article "Powershell Script with Functions to control and extract data from Login Enterprise" very helpful for understanding the API. It's a good reference (especially for a non-coder like me), but keep in mind that its example functions are written for version 4 of the API. You will need to change the version in the URL, and some syntax has changed. For instance, where a function asks for a sort order, v4 accepted Ascending or Descending, but newer versions require Asc or Desc.
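When porting the v4-era example functions, the two changes above (version segment in the URL, Asc/Desc sort values) can be handled in one place. The following is a minimal Python sketch rather than PowerShell, and it rests on assumptions: the `https://<appliance>/publicApi/<version>/<resource>` URL layout, the `tests` resource, and the `orderBy`, `direction` and `count` query parameter names are placeholders, so check your appliance's API documentation for the real paths and parameter names.

```python
from urllib.parse import urlencode

# v4-style sort values mapped to the shorter form that newer API versions
# expect, per the article ("Ascending"/"Descending" became "Asc"/"Desc").
SORT_ORDER_MAP = {
    "ascending": "Asc",
    "descending": "Desc",
    "asc": "Asc",
    "desc": "Desc",
}


def normalize_sort_order(order: str) -> str:
    """Translate a v4-style sort order into the newer Asc/Desc form."""
    key = order.strip().lower()
    if key not in SORT_ORDER_MAP:
        raise ValueError(f"Unknown sort order: {order!r}")
    return SORT_ORDER_MAP[key]


def build_request_url(appliance: str, version: str, resource: str, **query: str) -> str:
    """Build a public API URL for a given API version.

    ASSUMPTION: the '/publicApi/<version>/<resource>' layout and the
    'direction' parameter name are placeholders, not confirmed API details.
    """
    base = f"https://{appliance}/publicApi/{version}/{resource.strip('/')}"
    if "direction" in query:
        query["direction"] = normalize_sort_order(query["direction"])
    return f"{base}?{urlencode(query)}" if query else base


if __name__ == "__main__":
    # Hypothetical appliance name and parameters, for illustration only.
    print(build_request_url("le.example.com", "v6", "tests",
                            orderBy="name", direction="Ascending", count="100"))
```

Keeping the version as a parameter means moving to a newer API version is a one-argument change instead of an edit in every function.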

One other issue I ran into was migrating the test accounts. The API does not allow you to retrieve the passwords used to log on to the targets. This is for security reasons, and while I find it frustrating (I own the accounts), I can see why it would not be wise to allow them to be accessed. I even found the table in the SQL database that holds the passwords, but they were encrypted.

I reached out to Login VSI, and they were kind enough to create a utility for me that would copy the accounts from one appliance to another without exposing the passwords. (Thanks, guys!)

A new approach

Due to other initiatives and circumstances, this project was delayed for almost 9 months. Thankfully, this gave me time to rethink the process. Another big advantage is that, in the meantime, we created a new naming convention for all objects that specifies the ‘pod’ each object will be used with.

Accounts

First, since Login Enterprise has a Bulk Creation feature for accounts, we did not need to copy the accounts over from the old appliance, especially since they will all have new names anyway. This simplified the process significantly while still sparing us a lot of manual steps and the risk of fat-fingering entries.

Applications

Similarly, it is much easier to manually upload the few scripts that each pod will test with than to try to extract and insert them through a script.

NOTE: Another deciding factor was that when you extract a script from Login Enterprise, it inserts the word ‘default’ into the script's name. It also renames the script after the Application's name in the management console, which breaks our versioning and our repos.

Another factor was that, in splitting the big appliance into four pods, we have segregated which applications are tested in each pod. In fact, three of the new pods are each dedicated to a single application suite, and the last one runs three application suites.

Launchers

The new launchers are brand-new VMs, each dedicated to a specific pod. The old appliance had 44 launchers. To make sure we would not overwhelm the launchers, I wrote a script that logged the current sessions on each launcher to a CSV file every 5 minutes, and I let it run for a full day. This showed me how heavily each launcher was loaded over the course of the day. In this environment, no launcher ever went above 10 concurrent sessions, and most averaged 6-7. Since each launcher should be able to handle 20-30 concurrent sessions (I looked for documentation confirming this but could not find it), we should be able to use 11 launchers per pod. Across four pods that is 44 launchers, so we do not need any more launchers than we already have.
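The sampling script itself isn't reproduced here, but the analysis step can be sketched. This minimal Python sketch assumes the CSV has one row per launcher per 5-minute sample, with hypothetical `Timestamp`, `Launcher` and `Sessions` columns; the sample rows are made up for illustration. It reports each launcher's peak and average, then checks a worst case: if all 44 launchers hit their peak of 10 at the same moment, that is 440 sessions, or 110 per pod, which at the low end of the unconfirmed 20-30 estimate needs ceil(110 / 20) = 6 launchers, comfortably below the 11 planned per pod.

```python
import csv
import io
import math
from collections import defaultdict

# Illustrative sample only; a real log has one row per launcher every
# 5 minutes for a full day. Column names are hypothetical.
SAMPLE = """Timestamp,Launcher,Sessions
08:00,LAUNCHER01,6
08:00,LAUNCHER02,7
08:05,LAUNCHER01,8
08:05,LAUNCHER02,5
"""


def summarize_launcher_load(csv_text: str) -> dict[str, tuple[int, float]]:
    """Return {launcher: (peak_sessions, average_sessions)}."""
    samples: dict[str, list[int]] = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        samples[row["Launcher"]].append(int(row["Sessions"]))
    return {name: (max(v), sum(v) / len(v)) for name, v in samples.items()}


def launchers_needed_per_pod(total_peak_sessions: int, pods: int,
                             sessions_per_launcher: int) -> int:
    """Launchers each pod needs if the combined peak is split evenly.

    Summing every launcher's individual peak is a worst case: it assumes
    all launchers hit their peak at the same moment.
    """
    per_pod = math.ceil(total_peak_sessions / pods)
    return math.ceil(per_pod / sessions_per_launcher)


if __name__ == "__main__":
    summary = summarize_launcher_load(SAMPLE)
    for name, (peak, avg) in sorted(summary.items()):
        print(f"{name}: peak {peak}, average {avg:.1f}")

    # Figures from the article: 44 launchers that never exceeded 10 sessions,
    # four pods, and the low end of the unconfirmed 20-30 session estimate.
    print(launchers_needed_per_pod(44 * 10, pods=4, sessions_per_launcher=20))
```

Using the sum of individual peaks deliberately overstates the real load, since launchers rarely peak at the same time; if even that number fits, the split is safe.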

Continuous Tests

So the biggest issue for us was moving the Continuous tests into the new pods. A little background: we host our applications on Windows session hosts. We have our own application portal, AppHub, which gives our users an .RDP file to connect to a server in their assigned pool. So our Continuous tests are set up with a separate test for each RDSH server, and each test uses the Microsoft RDS connector to connect to that specific host.

Our approach was to manually create one test for each application suite and fully configure it (Account Group, Launcher Group, Application Group, schedule, thresholds, etc.). Then we wrote a PowerShell script that reads a list of target servers and, for each server in the file, uses the LE API to copy the existing test and then change the host it connects to.
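The loop described above can be sketched in Python (our actual script was PowerShell and isn't shown here). The `copy_test` and `set_host` callables are hypothetical stand-ins for your own wrappers around the Login Enterprise API calls that copy a test and change its host; the sketch deliberately does not assume any particular endpoint. The `<pod>-<suite>-<server>` test name is likewise a made-up example of a pod-aware naming convention, not the one we actually use.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class ClonePlan:
    template_test: str  # the fully configured test created by hand
    new_test_name: str
    target_host: str


def read_server_list(text: str) -> list[str]:
    """One server per line; blank lines and '#' comments are skipped."""
    servers = []
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            servers.append(line)
    return servers


def plan_clones(template_test: str, pod: str, suite: str,
                servers: Iterable[str]) -> list[ClonePlan]:
    """One clone per RDSH server (HYPOTHETICAL naming: <pod>-<suite>-<server>)."""
    return [ClonePlan(template_test, f"{pod}-{suite}-{s}", s) for s in servers]


def apply_plan(plan: list[ClonePlan],
               copy_test: Callable[[str, str], str],
               set_host: Callable[[str, str], None]) -> list[str]:
    """Run the plan through caller-supplied API wrappers.

    copy_test(template, new_name) -> new test id, and set_host(test_id, host),
    are placeholders for your own API code, not real library functions.
    """
    created = []
    for item in plan:
        test_id = copy_test(item.template_test, item.new_test_name)
        set_host(test_id, item.target_host)
        created.append(test_id)
    return created


if __name__ == "__main__":
    # Dry run with stub functions instead of real API calls.
    servers = read_server_list("rdsh01\n\n# retired\nrdsh02\n")
    plan = plan_clones("Pod1-SuiteA-Template", "Pod1", "SuiteA", servers)
    calls: list[tuple[str, str]] = []
    ids = apply_plan(plan,
                     copy_test=lambda template, name: f"id-{name}",
                     set_host=lambda test_id, host: calls.append((test_id, host)))
    print(ids)
    print(calls)
```

Passing the API calls in as functions makes it easy to dry-run the whole server list with stubs before pointing the script at a real appliance.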

Again, this was a much simpler approach, since we didn’t have to write a script to pull all of the various pieces from the API. That data is scattered across many different tables, and extracting the information for even a single test gets fairly complex.

One other thing to note: the example function for updating a test, provided in the article I mentioned above, requires you to copy all of a test's parameters and then write them all back, even if you want to change just one. To reduce the risk of corruption and other issues, we took a different tack and wrote a SQL statement that modifies the appropriate column in each record to update the host name.
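The real table and column names in the Login Enterprise database aren't given here, so this Python sketch uses a hypothetical `TestConnection` table (with `TestName`, `Host` and `Protocol` columns) in an in-memory SQLite database as a stand-in for the appliance's SQL Server. It illustrates the pattern described above: one parameterized UPDATE per test that changes only the host column, all inside a single transaction so that an unexpected row count rolls back every change.

```python
import sqlite3

# HYPOTHETICAL schema standing in for the appliance's real tables.
SCHEMA = """
CREATE TABLE TestConnection (
    TestName TEXT PRIMARY KEY,
    Host     TEXT NOT NULL,
    Protocol TEXT NOT NULL
);
"""


def update_hosts(conn: sqlite3.Connection, new_hosts: dict[str, str]) -> int:
    """Set Host for each named test without touching any other column.

    Runs in one transaction: if any test name matches no row (or more than
    one), nothing is committed and LookupError is raised.
    """
    updated = 0
    with conn:  # commits on success, rolls back if an exception escapes
        for test_name, host in new_hosts.items():
            cur = conn.execute(
                "UPDATE TestConnection SET Host = ? WHERE TestName = ?",
                (host, test_name),
            )
            if cur.rowcount != 1:
                raise LookupError(
                    f"Expected exactly one row for {test_name!r}, got {cur.rowcount}")
            updated += cur.rowcount
    return updated


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany(
        "INSERT INTO TestConnection VALUES (?, ?, ?)",
        [("Pod1-SuiteA-rdsh01", "template-host", "RDS"),
         ("Pod1-SuiteA-rdsh02", "template-host", "RDS")],
    )
    conn.commit()
    print(update_hosts(conn, {"Pod1-SuiteA-rdsh01": "rdsh01",
                              "Pod1-SuiteA-rdsh02": "rdsh02"}))
    print(conn.execute(
        "SELECT TestName, Host FROM TestConnection ORDER BY TestName").fetchall())
```

Parameter placeholders keep server names out of the SQL text itself, and checking the row count catches a misspelled test name before anything is committed.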

However, after we had written and tested the SQL statement, someone pointed out that the 7.0-preview version of the API added a new function called ConnectionResources that can be used to view or update the connection parameters of a given test. Because this wasn’t documented, we did not know about it until after we had composed the SQL statement and wrestled it into a PowerShell script.

Results

I am happy to report that, as of this writing, we have finished creating Pod 1. We are working out some kinks in setting up our AD accounts with the application configuration and data they need to run the application scripts, and we should be turning on all of the tests by the end of this week!

If anyone would like to see some of the scripts we created, either the ones we used or the ones we didn’t, feel free to reach out to me and I’d be happy to provide them.